A face in 128 points

The Cuomo admin is touting an upgraded state DMV facial recognition system that launched earlier this year -- the admin says the system is intended to help catch fraud and identity theft, and it's prompted "more than 100 arrests and 900 open cases since it launched in January."

A clip from the press release that we thought was interesting:

The upgraded system increases the number of measurement points on the face from 64 to 128, doubling the number of measurement points mapped to each digitized driver photograph and vastly improving the system's ability to match a photograph to one already in the database. The system also allows for the ability to overlay images, invert colors, and convert images to black and white to better see scars and identifying features on the face. Different hair styles, glasses, and other features that change over time - including those that evolve as a subject ages - do not prevent the system from matching photographs. DMV will not issue a driver license or non-driver ID until the newly captured photograph is cleared through the facial recognition system.

Since the facial recognition technology was implemented in 2010, more than 3,800 individuals have been arrested for possessing multiple licenses. Additionally, more than 10,800 facial recognition cases have been solved administratively, without the need for an arrest. If the transactions are too old to pursue criminal prosecution, DMV is still able to hold subjects accountable by revoking licenses and moving all tickets, convictions, and crashes to the individual's true record.

The Cuomo admin says almost half of the people tagged so far are accused of using a stolen identity in order to get a new license because their old license has been suspended or revoked.

You can probably imagine a future where this sort of tech is more widespread. So while reports on the number of "successful" cases are fine and good, it'll also be interesting -- in both a government watchdog sense and a tech sense -- to see reporting on the rate of false positives (that is, cases where the system flags someone as a fraudster, but that turns out not to be true). Just because something's based on an algorithm doesn't mean it can't also sometimes be wrong or biased. [Motherboard]

In a future that includes all sorts of algorithmic-based screening and enforcement -- where we're all depending more on computers getting things right -- it'll be important to know how often the computers are getting it wrong, too.

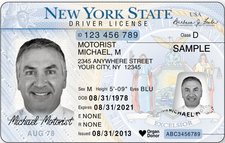

image: NYS DMV
